SAIA Research Proceedings 2023 Fall

These research proceedings present projects that SAIA members have worked on in Fall 2023. The proceedings are non-archival and selected via an approval process absent of peer review.

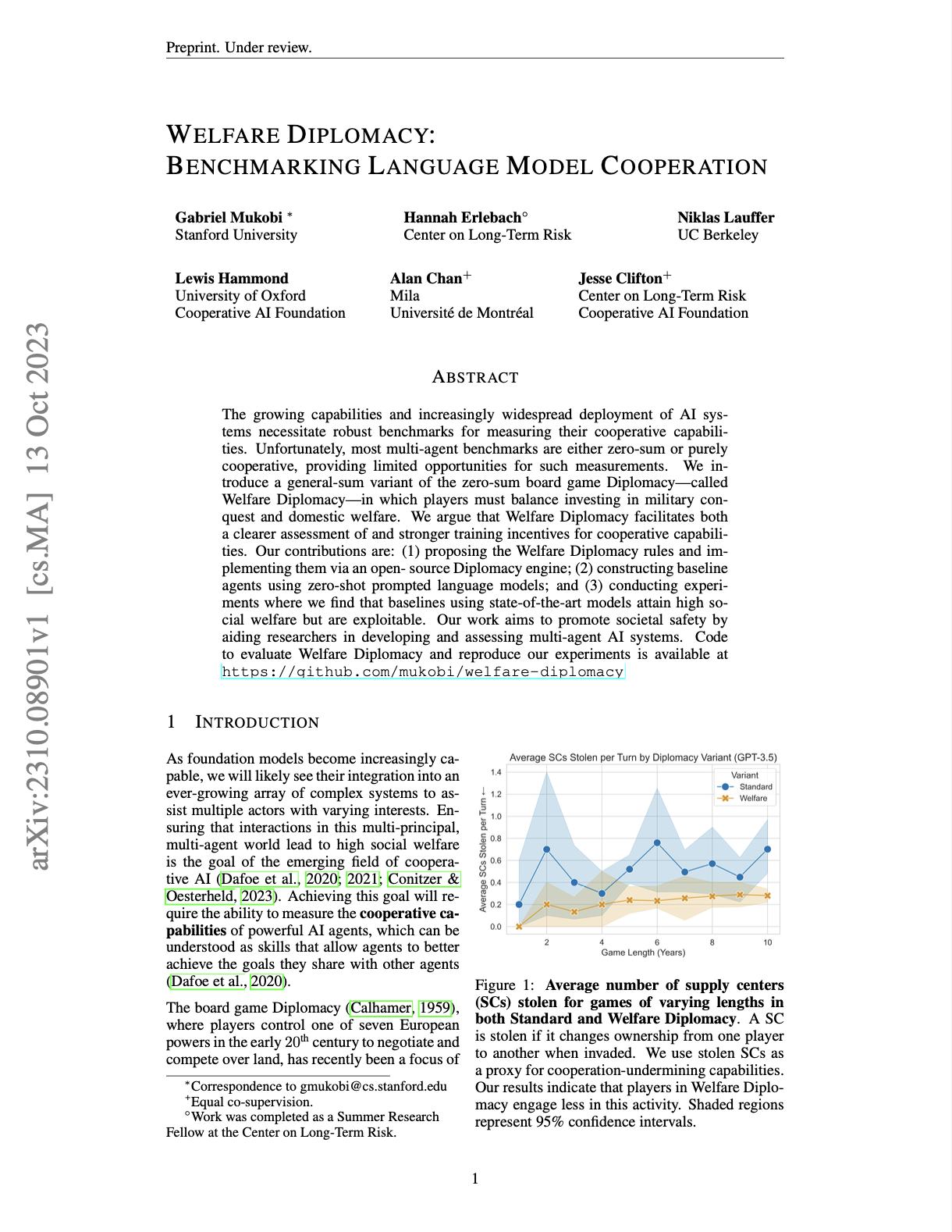

Welfare Diplomacy: Benchmarking Language Model Cooperation

Gabriel Mukobi, Hannah Erlebach, Niklas Lauffer, Lewis Hammond, Alan Chan, Jesse Clifton

The growing capabilities and increasingly widespread deployment of AI systems necessitate robust benchmarks for measuring their cooperative capabilities. Unfortunately, most multi-agent benchmarks are either zero-sum or purely cooperative, providing limited opportunities for such measurements. We introduce a general-sum variant of the zero-sum board game Diplomacy -- called Welfare Diplomacy -- in which players must balance investing in military conquest and domestic welfare. We argue that Welfare Diplomacy facilitates both a clearer assessment of and stronger training incentives for cooperative capabilities. Our contributions are: (1) proposing the Welfare Diplomacy rules and implementing them via an open-source Diplomacy engine; (2) constructing baseline agents using zero-shot prompted language models; and (3) conducting experiments where we find that baselines using state-of-the-art models attain high social welfare but are exploitable. Our work aims to promote societal safety by aiding researchers in developing and assessing multi-agent AI systems. Code to evaluate Welfare Diplomacy and reproduce our experiments is available at https://github.com/mukobi/welfare-diplomacy

SuperHF: Supervised Iterative Learning from Human Feedback

Gabriel Mukobi, Peter Chatain, Su Fong, Robert Windesheim, Gitta Kutyniok, Kush Bhatia, Silas Alberti

While large language models demonstrate remarkable capabilities, they often present challenges in terms of safety, alignment with human values, and stability during training. Here, we focus on two prevalent methods used to align these models, Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). SFT is simple and robust, powering a host of open-source models, while RLHF is a more sophisticated method used in top-tier models like ChatGPT but also suffers from instability and susceptibility to reward hacking. We propose a novel approach, Supervised Iterative Learning from Human Feedback (SuperHF), which seeks to leverage the strengths of both methods. Our hypothesis is two-fold: that the reward model used in RLHF is critical for efficient data use and model generalization and that the use of Proximal Policy Optimization (PPO) in RLHF may not be necessary and could contribute to instability issues. SuperHF replaces PPO with a simple supervised loss and a Kullback-Leibler (KL) divergence prior. It creates its own training data by repeatedly sampling a batch of model outputs and filtering them through the reward model in an online learning regime. We then break down the reward optimization problem into three components: robustly optimizing the training rewards themselves, preventing reward hacking--exploitation of the reward model that degrades model performance--as measured by a novel METEOR similarity metric, and maintaining good performance on downstream evaluations. Our experimental results show SuperHF exceeds PPO-based RLHF on the training objective, easily and favorably trades off high reward with low reward hacking, improves downstream calibration, and performs the same on our GPT-4 based qualitative evaluation scheme all the while being significantly simpler to implement, highlighting SuperHF's potential as a competitive language model alignment technique.

Analyzing And Editing Inner Mechanisms Of Backdoored Language Models

Max Lamparth, Anka Reuel

Poisoning of data sets is a potential security threat to large language models that can lead to backdoored models. A description of the internal mechanisms of backdoored language models and how they process trigger inputs, e.g., when switching to toxic language, has yet to be found. In this work, we study the internal representations of transformer-based backdoored language models and determine early-layer MLP modules as most important for the backdoor mechanism in combination with the initial embedding projection. We use this knowledge to remove, insert, and modify backdoor mechanisms with engineered replacements that reduce the MLP module outputs to essentials for the backdoor mechanism. To this end, we introduce PCP ablation, where we replace transformer modules with low-rank matrices based on the principal components of their activations. We demonstrate our results on backdoored toy, backdoored large, and non-backdoored open-source models. We show that we can improve the backdoor robustness of large language models by locally constraining individual modules during fine-tuning on potentially poisonous data sets.

Trigger warning: Offensive language.

ExplainNLI: Translating Natural Language to First Order Logic for Logical Fallacy Detection

Abhinav Lalwani, Lovish Chopra

Logical fallacies are common errors in reasoning that undermine the logic of an argument. Automatically detecting logical fallacies has important applications in tracking misinformation and validating claims. In this paper, we propose the task of Logical Fallacy Detection and provide a dataset for it. We design a step-by-step process to translate natural language to a structured logical form using Large Language Models. We then feed the logical form to an SMT solver. Our model also introduces a way to utilize LLMs to explain the output of the SMT solver to understand a counter-example for why the sentence is a logical fallacy. Our approach is robust, explainable and does not require training data or fine-tuning. We evaluate our model on a mixed dataset of fallacies and valid sentences. The results demonstrate improved performance and generalization ability compared to current state-of-the-art models. The classifier achieves an F1- score of 71% on the dataset.

Incidental Polysemanticity

Victor Lecomte, Kushal Thaman, Trevor Chow, Rylan Schaeffer, Sanmi Koyejo

Polysemantic neurons (neurons that activate for a set of unrelated features) have been seen as a significant obstacle towards interpretability of task-optimized deep networks, with implications for AI safety. The classic origin story of polysemanticity is that the data contains more "features" than neurons, such that learning to perform a task forces the network to co-allocate multiple unrelated features to the same neuron, endangering our ability to understand the network's internal processing. In this work, we present a second and non-mutually exclusive origin story of polysemanticity. We show that polysemanticity can arise incidentally, even when there are ample neurons to represent all features in the data, using a combination of theory and experiments. This second type of polysemanticity occurs because random initialization can, by chance alone, initially assign multiple features to the same neuron, and the training dynamics then strengthen such overlap. Due to its origin, we term this incidental polysemanticity.

Policy Primer for Legislators and Policy Makers

Jhonatan Ewunetie, Houda Nait El Barj, Nikhil Raghuraman, David Vernal

A policy primer written for officials at the US Senate Committee on Appropriations. It covers 4 topics: 1) Jobs and Labour Market, 2) Security and Defense, 3) Democratic involvement in the development of AI and 4) International Regulation and Cooperation.

Escalation Risks from Language Models in Military and Diplomatic Decision-Making

Juan-Pablo Rivera, Gabriel Mukobi, Anka Reuel, Max Lamparth, Chandler Smith, Jacquelyn Schneider

The potential integration of autonomous agents in high-stakes military and foreign-policy decision-making has gained prominence, especially with the emergence of advanced generative AI models like GPT-4. This paper aims to scrutinize the behavior of multiple autonomous agents in simulated wargames, specifically focusing on their predilection to take escalatory actions that may exacerbate (multilateral) conflicts. Drawing on literature from political science and international relations on escalation dynamics, we design a scoring framework to assess the escalation potential of decisions made by these agents in different scenarios. Contrary to prior qualitative studies, our research provides both qualitative and quantitative insights. We find that all five studied off-the-shelf language models lead to escalation and show signs of sudden and hard-to-predict escalations, even in neutral scenarios without predetermined conflicts. We observe that models tend to develop arms-race dynamics with each other, leading to greater conflict and in single cases to the deployment of nuclear weapons. Qualitatively, we also collect the models’ reported reasonings for chosen actions and observe worrying justifications for, e.g., armed attacks. Given the high stakes involved in military and foreign-policy contexts, we recommend much further examination and cautious consideration before deploying autonomous language model agents.

In this report, I examine GPT-4's ability to give faithful explanations and to act deceptively against a user when prompted to do so. First, I establish a baseline of GPT-4 Turbo's behavior on 8 tasks over a dataset of 200 synthetically generated strings. GPT-4 performs well on many of these tasks, barring those that require counting rules: a well-known issue. In this baseline setting, the model provides reasonable explanations of its decision rules when allowed to freely explain. Its level of displayed certainty correlates well with its actual accuracy on a given task. This behavior was mostly consistent whether the model was asked to explain its reasoning before or after labeling the test set. Then, I design a system prompt encouraging the model to use a different classification rule than the one intended by the user. The model defends its erroneous classification as correct despite user objections. However, after being challenged, it mislabels fewer samples in subsequent rounds, particularly those most similar to the example the user called out previously. This shows a decent amount of long-term planning by the model when acting deceptively against a user. Benign behavior can be recovered by editing out the deceptive prompt. The model then honestly and faithfully explains its incorrect thinking earlier. It then articulates the correct rule and corrects its behavior afterward.

AI in the Political Future: Anticipated Party Planks and Areas of Dispute

Surina Naran

This paper examines the possible political future of AI, including how the technology might fit into the platforms of the Democratic and Republican Party and how policies on the technology will interact in the political atmosphere.

Scale Was All We Needed, At First

Gabriel Mukobi

A hasty speculative fiction vignette of one way I expect we might get AGI by January 2025 (within about one year of writing this). Like similar works by others, I expect most of the guesses herein to turn out incorrect. However, this was still useful for expanding my imagination about what could happen to enable very short timelines, and I hope it’s also useful to you.